AI and automation in the tech industry are rapidly accelerating, and for UX, the AI future is nearly here. In this article I’ll give you a blueprint with important considerations to help your UX team adjust how you create AI-supported user experiences.

Here’s the big picture: Venture funding in generative AI startups has grown from $4.8B in 2022 to $12.7B in the first half of 2023. And 72% of business leaders want AI adoption within 3 years. Workers are making it happen by adding AI to their jobs, even though there are inherent risks and biases. Lastly, with a growing number of AI tools available, more users can augment, automate, or bypass product and service experiences.

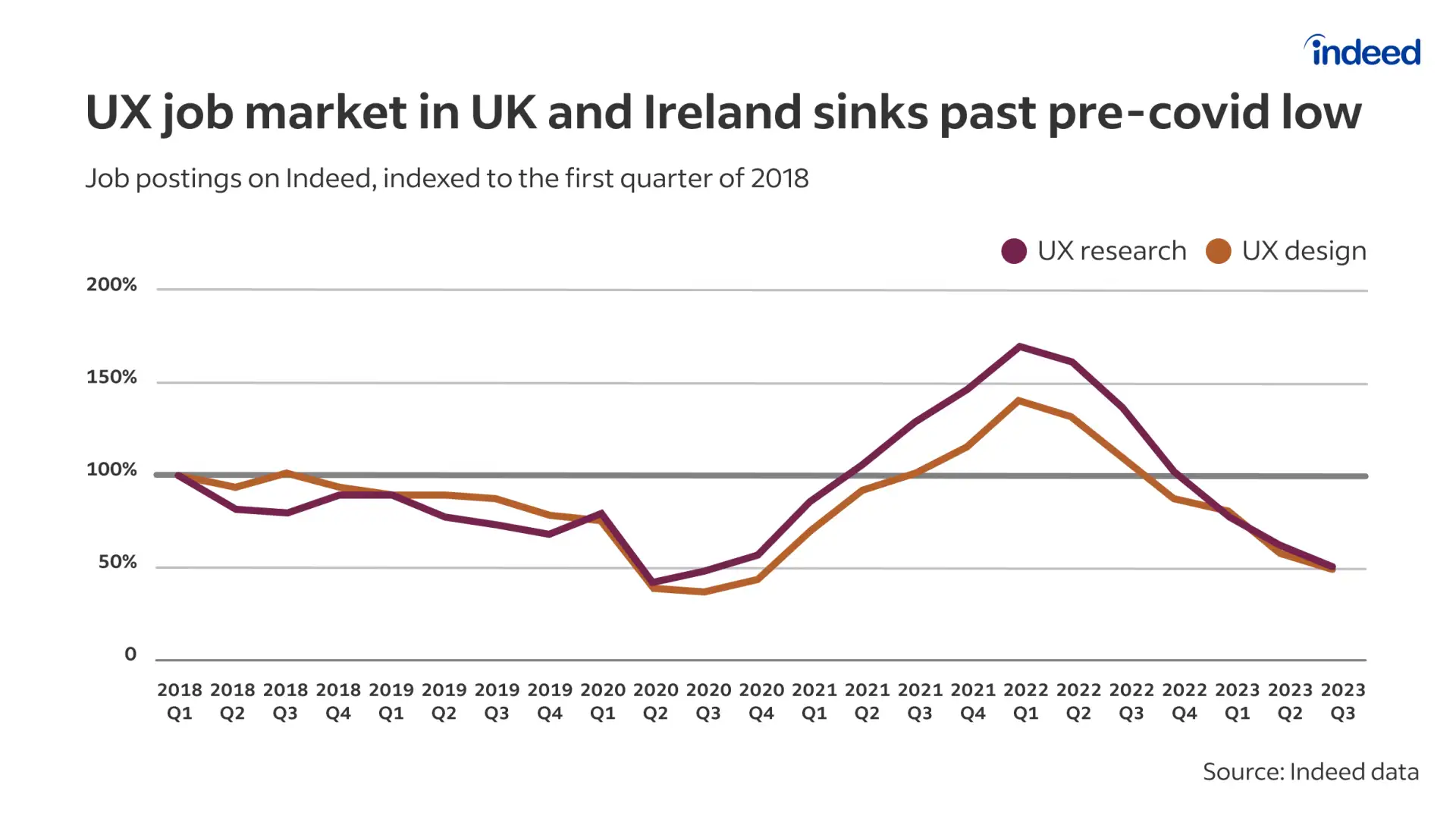

What does all of this mean for the UX industry?

Well, to start, UX practitioners are facing some common challenges with AI like:

- Staying updated on AI tech and its benefits and risks.

- Using AI tools to streamline UX processes.

- Choosing when to apply AI in customer experiences.

- Using AI for idea generation and initial product concepts.

- Building user trust in automated experiences.

UX leaders and their teams need to work on getting ready for these changes. Here’s some insight from a colleague of mine:

“We’re peak hype cycle right now. It’s an exciting, uncharted problem space, branding opportunity, and value-generating incantation on Wall Street. As it becomes the new status quo for technology businesses like ours, first-mover advantage will be overshadowed by creative cultures that prioritize human needs and mitigate harmful externalities. It’s critical that designers temper impulses to hop on the hype train, and force honest discourse with decision-makers that’s grounded in empathy, nuance, and careful evaluation.” — Mark Laughery, Lead UX Designer

How can your team do that? Here are my recommendations, with plenty of supporting resources.

Start with AI learning

For UX folks just starting with AI, let’s begin with the main concepts.

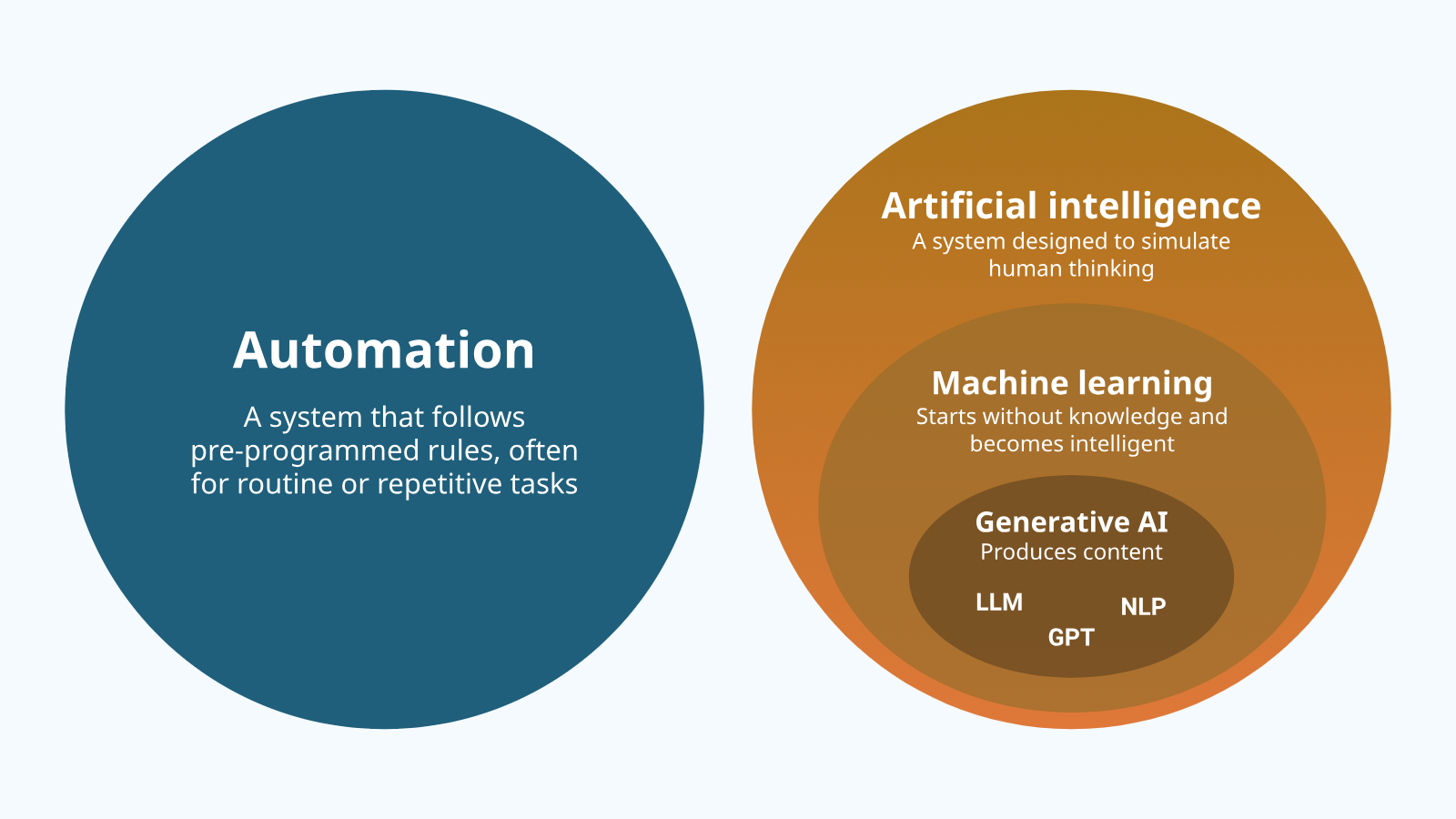

Think of automation and AI as separate. Automation is a system following set rules, often for routine tasks. And artificial intelligence (AI) is a system designed to simulate human thinking.

Because human thinking is difficult to simulate, AI is made up of many components. Machine Learning (ML), a system that starts without knowledge and becomes intelligent, draws from specific information sources. Let’s define some of the other components, too:

- Generative AI (GenAI): AI that can produce content.

- Large Language Model (LLM): AI models designed to understand and generate human-like text by learning from vast amounts of data.

  - Tasks: Translation, sentiment analysis, text summarization, chats.

- Natural Language Processing (NLP): Machine learning technology that breaks down and interprets human language.

  - Tasks: Filtering emails, sorting feedback, predicting text, auto-correct, chatbots, translation.

- Generative Pre-trained Transformer (GPT): A type of LLM that processes and generates text in a context-aware manner.

  - Tasks: Text completion, summarization, answering questions, translation.

Big tech companies have created helpful explanations and rules for how AI ideas work and can be used ethically and responsibly. Start with these: Google, Microsoft, Salesforce, IBM, LinkedIn, and IEEE.

For teams venturing into AI or considering it, regular customer-focused learning is helpful. Choose from the following:

- Share self-learning resources such as AI courses, webinars, articles, and podcasts.

- Study relevant real-world AI case studies.

- Invite knowledgeable guest speakers.

- Arrange workshops to clarify automation opportunities.

- Gather user feedback on AI through new research.

- Guide the team through IBM’s design-thinking AI team essentials.

- Schedule recurring sessions focused on customer risks and benefits by scenario.

- Talk with internal data scientists if they’re available.

- Study foundational knowledge in a group with your peers.

- Hold a monthly reading club to keep up-to-date with trends and research papers.

Adapt these suggestions to your team’s working style and needs.

Evolve UX processes with AI

UX processes and tools help identify important user issues and create useful solutions. How can AI be integrated into UX effectively?

“When used responsibly, AI can be a powerful partner. It can streamline repetitive and draining tasks while allowing humans to excel in areas where they provide unique value, such as imagination, creative direction, and human connection. If nothing else, people will always be needed to edit and curate the output of AI systems for their target users and audiences.” — Adrienne Downing, Senior UX Researcher

AI is beneficial for routine tasks, such as producing wireframes using established UI elements. But the advantages of using AI to provide suggestions based on condensed data that shapes a product’s direction might not always outweigh the potential drawbacks. This remains uncertain for now.

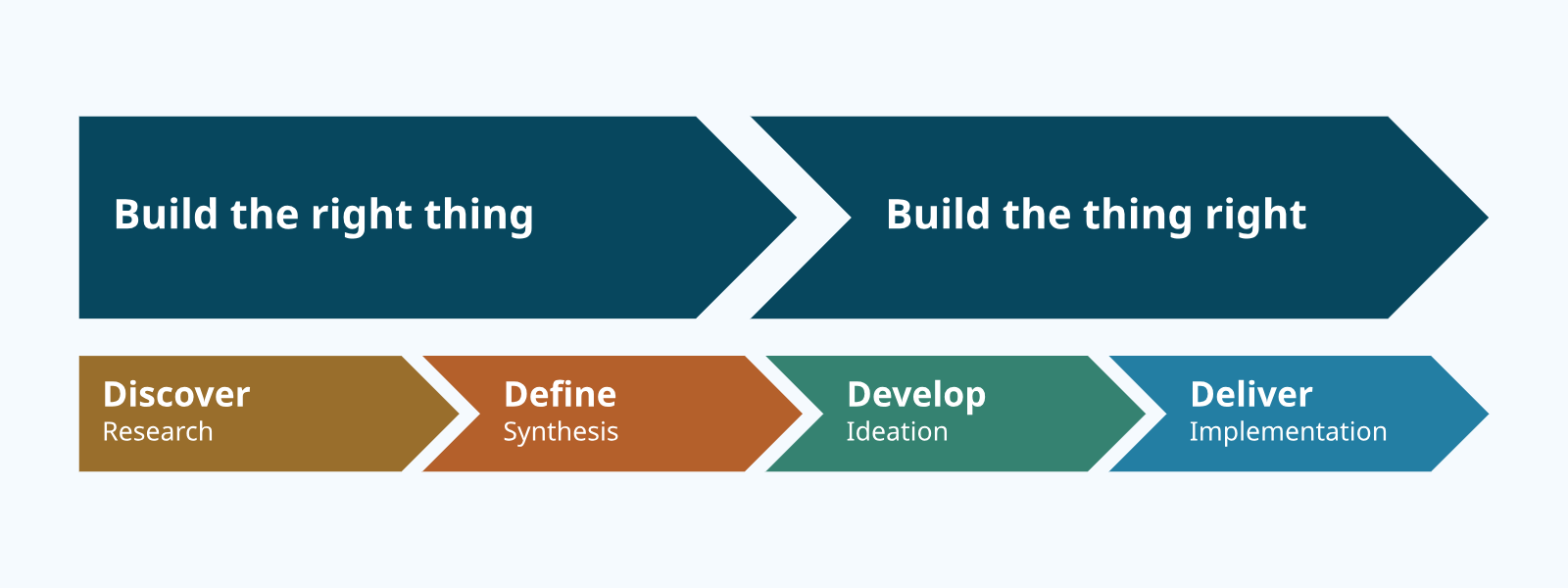

Nonetheless, let’s examine the increasing array of AI-driven UX tools and assess their strengths and weaknesses within the UX double diamond.

Get the right tool for the job

AI tools exist that can co-pilot or take over end-to-end processes. We aren’t far away from being able to set up one or more AI agents, such as AgentGPT, MindOS, 4149.AI, and AutoGPT, to run a prescriptive UX process. And we’re a little further away from enabling AI to determine and guide an emergent UX process. Here be dragons, but don’t look away from this space.

In the meantime, here are a few lists of tasks and the AI tools that can help you refine your process at each stage.

Build the right thing: discover and define

In the discover phase, the team gathers unstructured findings from data, research, hypotheses, assumptions, unknowns, and possibilities. In the define phase, the team groups insights into themes, opportunity areas, and “how might we” questions to produce a brief.

Example AI tasks and tools:

- Conduct user interviews (RhetorAI, UserZoom)

- Automate surveys (Pollfish, HotJar)

- Compile lit reviews (SciSpace Co-Pilot)

- Collate client risks (Kaizan)

- Streamline note-taking (Otter, Subito, Enote)

- Synthesize findings (Reframer, UserEvaluation, Chattermill, Usabilla, Brainpool)

- Analyze comments (Comments Analytics)

- Generate summaries (GeniePM, Stellar, Kraftful, Userdoc)

Benefits:

- Compile broad inputs and data

- Automate continuous discovery insights

- Accelerate speed to recommendations

- Improve data collation and analysis

- Auto-transcribe recordings into transcripts

Risks:

- Incomplete data sets may increase bias

- Reduced human empathy and errors in discerning user intent

- Can’t ensure these are the right research and interview questions

- Can’t ask strategic follow-up questions

- User data privacy exploitation and security issues

Build the thing right: develop and deliver

In the develop phase, the team generates ideas and evaluates which are most promising for solving the core user problem. In the deliver phase, the team builds, tests, and iterates on the solution, making it a reality and moving from uncertain to certain.

Example AI tasks and tools:

- Generate copy (ChatGPT, NotionAI) and wireframes (WireGen)

- Automate Figma work (Automator, Autoname, Ando)

- Compile feedback (Resonote)

- Simulate testing (VisualEyes)

- Automate localization (Rask, Translator)

- Recommend optimizing tests (UserTesting)

- Streamline design to code (Bifrost, Rendition)

Benefits:

- Boost speed from concept to test results, and shorten time-to-market

- Streamline team collaboration and feedback loops

- Reduce errors and biases with consistent testing

- Diversify design testing and insights at scale

- Automate complex testing scenarios

Risks:

- Missed critical findings due to skipping or simulating research

- Difficulty with iterative user-centric innovation

I recommend checking with stakeholders such as legal and UX operations teams for approval before using AI tools in your product discovery and development process.

Evolve customer experiences with AI

Next, we’ll talk about essential steps to prepare UX for guiding AI and automation in customer experiences.

Anchor decisions to AI principles

Adopt or create AI and automation principles and clearly define their role in decision-making with leadership.

Explore what large tech firms have published: IBM, Google, Apple, Microsoft, and LinkedIn. And use UX of AI, which is a helpful compilation of resources and principles.

At Indeed, the AI ethics team released AI Principles. The UX team introduced generative AI guardrails thanks to Mark Laughery, and Krissy Groom and Adrienne Downing drafted emerging AI and automation UX guidelines. The following pillars emerged from our work:

- Make it user-centric.

- Provide control.

- Set expectations.

- Mitigate errors.

- Be ethical.

- Consider user impact.

“As UX practitioners, let’s make sure we put the users front and center by considering the user needs, risks to user trust, and implications to all users in your ecosystem.” — Daowz Sutasirisap, UX Manager

Evaluate features on the automation spectrum

Automation is a spectrum in which a human and system act on and inform each other, depending on the level of autonomy the human or machine has.

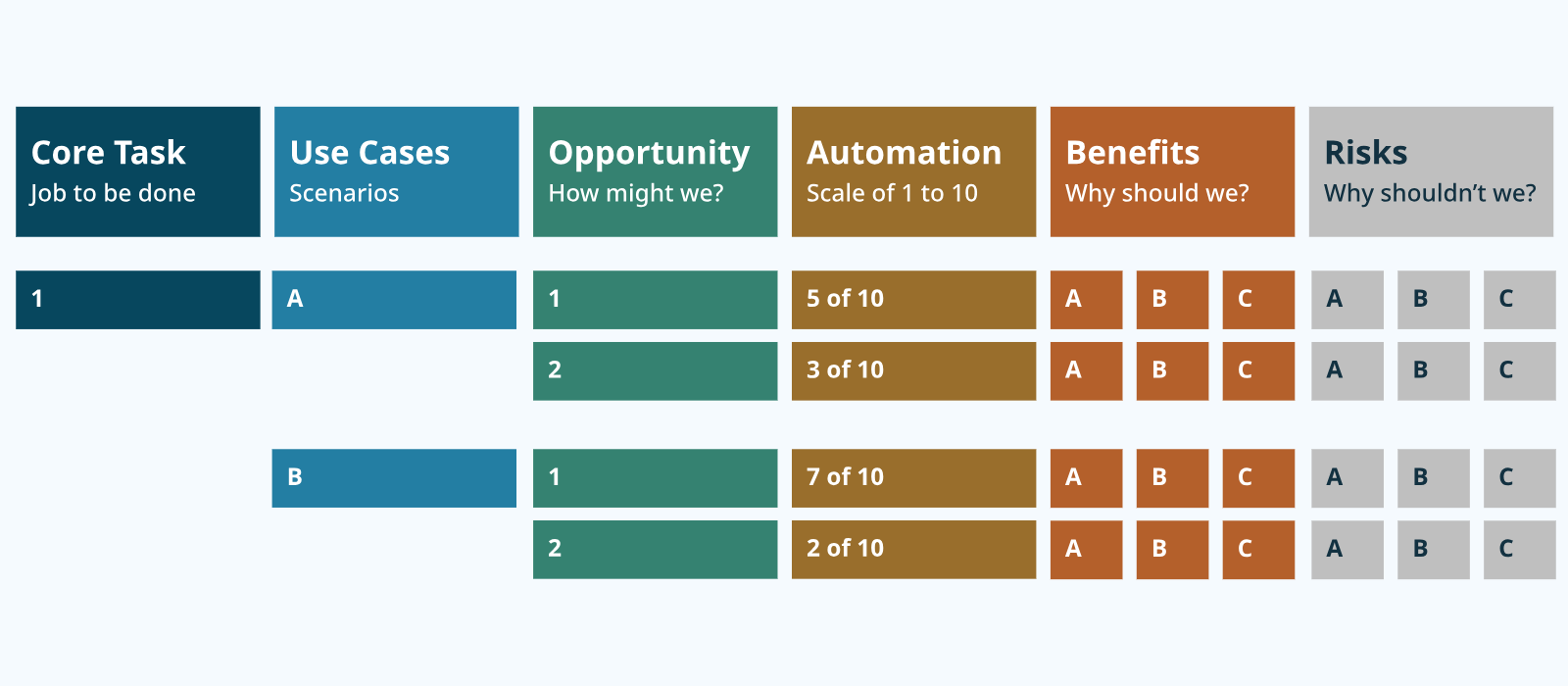

Start auditing your automation capabilities. List core user tasks and use cases, then map existing features and brainstorm new opportunities. Score the level of automation using a 1 to 10 scale. List important benefits and risks with relevant research evidence.
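One way to keep such an audit consistent is to record each feature as a small structured row. The sketch below is only an illustration of the steps above, not a tool we use: the `FeatureAudit` class, its field names, the `needs_research` helper, and the sample features are all hypothetical. It assumes the 1 to 10 score runs from no input from the system (1) to no input from the human (10).

```python
from dataclasses import dataclass, field


@dataclass
class FeatureAudit:
    """One row of a hypothetical automation audit."""
    user_task: str          # core user task or use case
    feature: str            # existing feature or new opportunity
    automation_score: int   # assumed scale: 1 = no system input, 10 = no human input
    benefits: list[str] = field(default_factory=list)
    risks: list[str] = field(default_factory=list)
    evidence: list[str] = field(default_factory=list)  # supporting research

    def __post_init__(self) -> None:
        # Reject scores outside the 1 to 10 scale.
        if not 1 <= self.automation_score <= 10:
            raise ValueError("automation_score must be between 1 and 10")


def needs_research(rows: list[FeatureAudit]) -> list[FeatureAudit]:
    """Return rows with no research evidence, most automated first."""
    missing = [row for row in rows if not row.evidence]
    return sorted(missing, key=lambda row: row.automation_score, reverse=True)


# Made-up sample rows, for illustration only.
audit = [
    FeatureAudit("Apply to a job", "Autofill application form", 5, evidence=["Study A"]),
    FeatureAudit("Apply to a job", "Auto-submit applications", 9),
    FeatureAudit("Search for jobs", "Suggested search terms", 3),
]

for row in needs_research(audit):
    print(row.automation_score, row.feature)
# 9 Auto-submit applications
# 3 Suggested search terms
```

Sorting the unevidenced rows by score is a design choice of this sketch: it surfaces the most autonomous features first, on the assumption that those carry the most risk when research is missing.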

“Analyzing core use cases and research insights helps to uncover where user needs and company goals converge. It’s important to find that balance when considering where and how to introduce automation features across a user’s journey.” — Kelsey Hisek, Sr. UX Designer

Whether you’re looking to add new automation points, remove harmful ones, or move existing features up or down on the automation spectrum, use research evidence to influence which tests get prioritized.

In the automation spectrum list below, 10 represents no input from the human and 1 represents no input from the system:

1. The system offers no assistance, and the human must make all decisions and actions.

2. The system offers a complete set of decision/action alternatives.

3. Narrows the selection down to a few.

4. Suggests one alternative.

5. Executes that suggestion if the human approves.

6. Allows the human a restricted time to veto before automatic execution.

7. Executes automatically, then necessarily informs the human.

8. Informs the human only if asked.

9. Informs the human only if the system decides to.

10. The system decides everything and acts autonomously, ignoring the human.
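If your team tracks these levels in a spreadsheet or script, the ten levels above could be encoded roughly as follows. This is a minimal sketch: the `AutomationLevel` member names and the `human_control` helper are hypothetical, and grouping the levels into three bands is my own reading of the list rather than part of the scale itself.

```python
from enum import IntEnum


class AutomationLevel(IntEnum):
    """The ten levels of the automation spectrum (names are illustrative)."""
    NO_ASSISTANCE = 1
    OFFERS_ALL_ALTERNATIVES = 2
    NARROWS_ALTERNATIVES = 3
    SUGGESTS_ONE = 4
    EXECUTES_IF_APPROVED = 5
    EXECUTES_UNLESS_VETOED = 6
    EXECUTES_THEN_INFORMS = 7
    INFORMS_IF_ASKED = 8
    INFORMS_IF_IT_DECIDES = 9
    FULLY_AUTONOMOUS = 10


def human_control(level: AutomationLevel) -> str:
    """Summarize who has the final say at a given level (assumed grouping)."""
    if level <= AutomationLevel.EXECUTES_IF_APPROVED:
        return "human decides"    # levels 1-5: nothing runs without the human
    if level == AutomationLevel.EXECUTES_UNLESS_VETOED:
        return "human can veto"   # level 6: runs unless the human objects in time
    return "system decides"       # levels 7-10: runs without waiting for the human


print(human_control(AutomationLevel(6)))  # human can veto
```

A grouping like this can make it easier to spot which features would need an approval step or a veto window if you move them down the spectrum.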

“When considering new types of automation to add, concept tests are a great research method to employ to understand where your users perceive the most benefit and where they have concerns. You can address concerns by using lower levels of automation on the spectrum to maintain human control where it’s needed most.” — Kathryn Brookshier, UX Research Manager

Focus automation on building trust

Trust is a choice and a relational skill. Since it’s based in vulnerability and expectations, it’s critical to consider trust when adding new automation features.

“When we look at the moments that matter within a journey, we need to understand areas within our products and services that truly require human relationship for trust-building. Just as important is understanding where and how a system can effectively outperform human ability, yet also embody human elements of adaptability and authenticity.” — Sarah Gagné, Sr. UX Researcher

At Indeed, we’ve conducted and analyzed over 30 research studies yielding insights on trust. Consider conducting research on users’ trust in automation within product experiences, service interactions, and engagement with other users (if applicable).

Pay attention to insights regarding the four trust-builders: comfort, connection, confidence, control. Also, mind the trust-breakers like ability gaps, inflexible processes, risk aversion, inauthentic or absent human touch, bias, and data misuse.

Based on the findings, recommend what is most useful in your team’s context, such as:

- Measuring an automation-trust customer experience metric.

- Performing a trust-focused gap analysis with automation features.

- Tracking trust-builders and trust-breakers in automation-focused research studies.

- Creating actionable feedback loops within automation features to identify trust issues and take corrective actions.

Align automation with users’ expected outcomes

Ultimately, when deciding to add or enhance automation features, the main focus should be on improving user outcomes that the team prioritizes, based on evidence.

Use these questions as a guide to ensure you’re moving in the right direction to meet users’ expected outcomes:

- Do users trust and use automation to achieve value for their core needs?

- Does it reduce actual and perceived effort and improve satisfaction?

- Does it lower risks and avoid introducing new ones?

- Is the task suitable for automation because it’s boring and repetitive, or unsuitable because it’s complex, enjoyable, or high-stakes?

- How complex is the task? Is it owned and completed by one or multiple users?

- How much training and control do users want or need for the automation?

The future of AI is UX

Above all else, UX has the explicit responsibility and capability to hold businesses, users, and society to high ethical standards when it comes to using AI-powered products and services to achieve goals. If you don’t know where to start, try a risk assessment. Plus, UXers can help users identify whether their goals and outcomes are healthy or not for themselves and society in the first place.

There’s more to explore about UX and AI — but for now, we’ll stop here.

A big thanks to Indeed’s UX peers whose work I mentioned, and those I didn’t. It’s been instrumental in guiding the impact of AI and automation in the job seeker and employer experiences at Indeed. Learn more about some of them: Adrienne Downing, Mark Laughery, Daowz Sutasirisap, Kathryn Brookshier, Sarah Gagné, Krissy Groom, Kelsey Hisek, Michelle Sargent.

This guide may not be comprehensive, but it’s the perfect link to share with an emergent leader or someone trying to advocate for more ethical AI use in the workplace. Help spread the knowledge by sharing it with your networks, and stay ready for the future of UX and AI.

—

Illustrated by Morgan Jamison